Face card, no cash, no credit / Yes God, don't speak, you said it

Look at you / Skip the application interview

- Troye Sivan, “One of Your Girls”

Machine intelligence that seemed magical last year is completely normal today. In May 2025, ChatGPT enthusiasts casually play GeoGuessr, pinpointing where in Nepal a picture of scattered rocks was taken. Consumer apps still refuse requests to analyze human faces, but the models already run forbidden arithmetic on the “mirror of the soul.” Brace yourself. The golden age of physiognomy has only just begun!

Train a model to spot one trait and it will often nail another, even more precisely. That’s the power of pattern recognition pointing to underlying realities. A model trained to assess feminine faces, for instance, outperforms humans at evaluating aggression and happiness. Through simple photographs, deep neural networks (DNNs) uncover patterns in our physical world too subtle for human eyes.1

Humans already like to judge who is gay or straight, but achieve only 61% accuracy for men and 54% for women. DNNs are better at reading face cards. In a 2018 study, Wang and Kosinski tested their model on dating site images, correctly telling gay men from straight men 81% of the time and lesbians from straight women 71% of the time. Their results support the theory that prenatal hormones cause gender-atypical physical features in homosexuals.2

Kosinski returned in 2021 to correctly identify political orientation from real-life images with 72% accuracy across the Anglosphere, outperforming both humans (55%) and long personality questionnaires (66%), even after controlling for age, gender and ethnicity. While liberals typically faced cameras more directly and expressed surprise (soyface!), conservatives tilted their heads and expressed disgust more frequently.3

Modern Tools for Ancient Problems

Three types of AI shape 2025 technology in different ways. LLMs map relationships between words, and recommendation algorithms decode our hidden preferences. Convolutional Neural Networks (CNNs), used in facial recognition, scan images through many filter layers, like our pre-conscious visual cortex. Small differences in hundreds or thousands of individual measurements combine into predictive patterns.

Chinese scientists in 2016 hit a remarkable 89.5% accuracy with CNNs that sorted home-grown mugshots into criminal and non-criminal. Differences in lip curvature, eye spacing, and nose-mouth angles emerged. Criminal faces showed increased dysmorphology (abnormal features since birth), a finding the researchers called a “law of normality” for non-criminal appearances.4

Why are faces and personality linked? Shared genetics shape both temperament and facial structure from birth, directly and through hormones like testosterone, which influence behavior and skull morphology. Social feedback plays a role too: attractive people get more positive reinforcement and grow more extraverted. Over time, smiles and frowns sculpt muscles and leave wrinkles.5 The browline keeps the score.

We all do, whether we like it or not. Snap judgments come daily: do you bang a stranger at the orgy, or glove up before delivering first aid? Predictive models make our visual risk cues more explicit. A 2019 experiment asked participants to decide whether a photographed person had HIV. Deep learning surfaced the unspoken signals people already use: red eyes, a coquettish gaze, an unkempt look.6

Stop, Frisk, Scan: Bayesians Save Lives

Race may be a myth at the level of nations, but it’s legible to doctors and gravediggers. Forensic anthropologists can peg a skull’s race with 80% accuracy using just a few measurements. East Asians have wide skulls and flat cheekbones. Africans show round eye sockets and forward jaws. Europeans display long heads and teardrop nasal cavities. These differences are obvious to trained eyes,7 but what about closer groups?

People distinguish Chinese, Japanese, and Korean faces at only 39% accuracy, barely above random guessing. But a 2016 deep learning model trained on 40,000 Asian celebrity photos hit 75% accuracy. It exposed underlying patterns: Chinese faces are the most likely to show bushy eyebrows, and Japanese faces have more prominent eye bags.8 Computer-aided deep physiognomy traces facial features back to the genome.

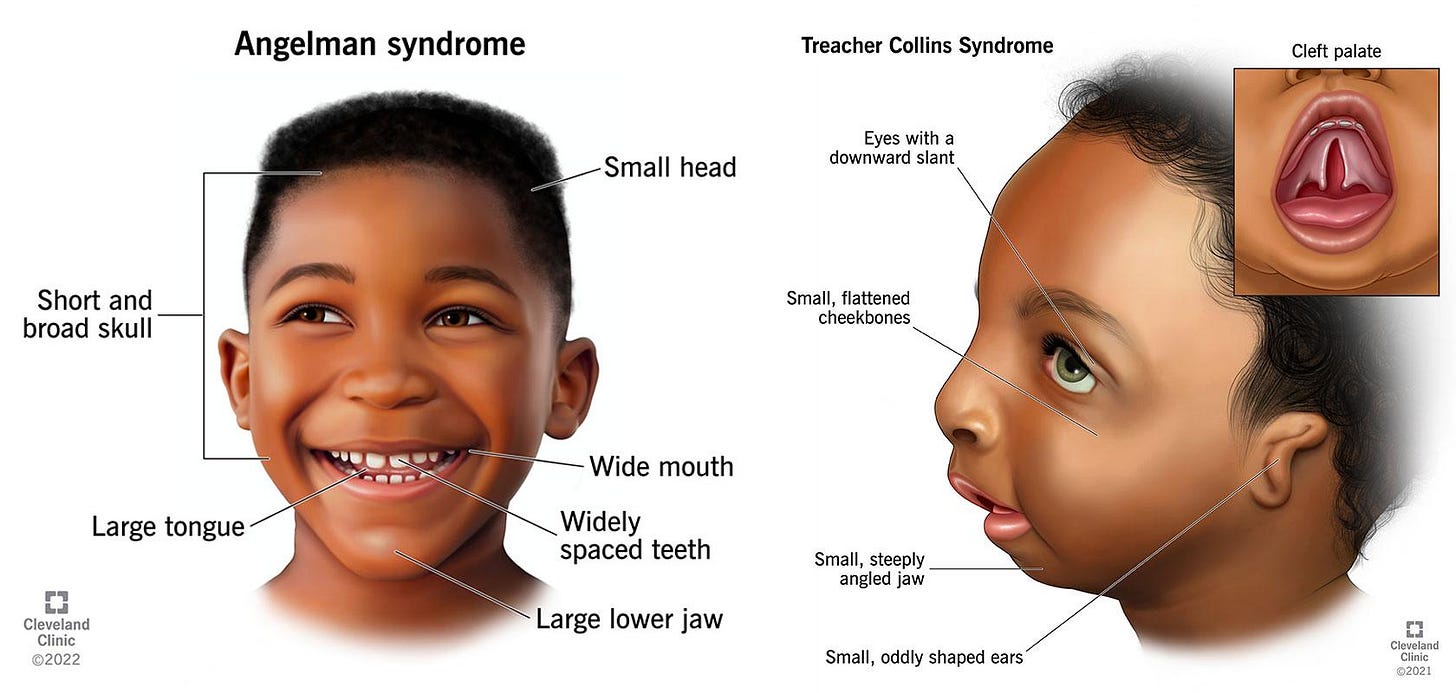

Deep physiognomy already serves medical diagnosis. Doctors identify conditions like Down syndrome or Angelman syndrome from facial features. Since 2019, clinicians have also been able to use Face2Gene, an app powered by the DeepGestalt AI system, to predict genetic mutations from photographs. Across 200 syndromes, the system placed the correct diagnosis in its top 10 suggestions 91% of the time.9

Ethnic profiling improves disease prediction via Bayesian logic. Weak Fragile X signals carry more weight in Ashkenazi Jews, where the syndrome is common. Strong sickle cell signals matter less in East Asians, where the disease is rare. Human doctors intuitively calibrate genetic risks to reduce false positives. AI just does it better, and with a paper trail.

You sound lonely. I can fix that.

There’s no reason deep physiognomy should stop at the face. Researchers have trained AI to detect Parkinson’s disease from voice recordings with 99% accuracy. The disease distorts motor control of the vocal cords, producing telltale tremors, reduced volume, and flattened pitch. Even in healthy individuals, voiceprint is a personality signal: extraverts tend to speak louder, faster, and with more pitch variation than introverts.10

Age, gender, and sexual orientation leave audible fingerprints. AI quantifies them, and pushes physiognomy deeper into the throat, inferring height and facial structure from voice alone. Regional accent recognition feeds ethnicity estimation, which sharpens disease prediction. Deep physiognomy, like frontier AI in general, is multimodal, fusing audio, text, and image into composite judgments. This is bad news for liars.

Multimodal physiognomy can cross-check every signal. Humans often get misled by a dominant impression, trusting a friendly face even when the tone is menacing. The model weighs face, voice, and gesture together, flags discrepancies, and builds an ensemble stronger than any single cue.11 Whereas humans create first impressions, deep learning models create first compositions.

The noble lie of our time is “holistic judgment.” We don’t eyeball the bill at grocery checkout: we scan and verify. Yet in parole and admissions, institutions still divine risk and merit by intuition. Bodycams, Ring footage, and forensic logs empower honest people to resist sanctioned myths like “systemic racism,” and compute takes the data further. We don’t need a machine god to save us. We need a machine auditor.

Keles, Umit, et al. “A Cautionary Note on Predicting Social Judgments from Faces with Deep Neural Networks.” Affective Science, vol. 2, 2021, pp. 438–454.

When researchers deliberately modified images by altering colors, removing hair, or changing luminance, object-recognition models showed significant accuracy drops averaging 0.28 across attributes, while face identification models maintained performance with only a minimal 0.05 decline. A key advantage for real-world deployment!

Wang, Yilun, and Michal Kosinski. “Deep Neural Networks Are More Accurate Than Humans at Detecting Sexual Orientation from Facial Images.” Journal of Personality and Social Psychology, vol. 114, no. 2, 2018, pp. 246–257.

Analysis revealed that gay men typically displayed gender-atypical features including narrower jaws, longer noses, larger foreheads, less facial hair, and lighter skin. Lesbians showed masculine-typical features like larger jaws, smaller foreheads, darker hair, less eye makeup, and different grooming styles. Even using just the contour (the outer shape of the face) yielded 75% accuracy for men and 63% for women, suggesting fixed facial structure alone significantly signals sexual orientation, separate from expressions, grooming or other changeable features.

Kosinski, Michal. “Facial Recognition Technology Can Expose Political Orientation from Naturalistic Facial Images.” Scientific Reports, vol. 11, no. 1, 2021, article 100.

He examined over 1 million faces across the US, UK, and Canada using an open-source algorithm rather than developing specialized political classification tools. This research demonstrated cross-sample validity, with models trained on one country’s dating profiles successfully identifying political orientation in other countries and platforms.

Wu, Xiaolin, and Xi Zhang. “Responses to Critiques on Machine Learning of Criminality Perceptions (Addendum of arXiv:1611.04135).” arXiv preprint arXiv:1611.04135v3, 26 May 2017.

Critics often misunderstood the researchers’ intentions and overlooked crucial statistical context. Wu and Zhang clearly explained the base rate fallacy affecting interpretation of their results: despite the 89% true positive rate, the actual probability of criminality for someone flagged by their system would be merely 4.39% due to China’s low crime rate (0.36%). Their response paper meticulously addressed methodological concerns, demonstrating through control experiments that the classifiers couldn’t separate randomly labeled data, confirming the results weren’t due to overfitting.
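The base rate arithmetic in this note can be checked with a few lines of Bayes' rule. This is only a sketch: the 0.36% crime rate and the 89% true positive rate come from the note, but the false positive rate is not quoted here, so the 7% below is an assumption, chosen because it reproduces the 4.39% figure. The function name `posterior` is likewise just illustrative.

```python
def posterior(prior: float, tpr: float, fpr: float) -> float:
    """Return P(condition | flagged) using Bayes' rule."""
    true_positives = tpr * prior            # flagged and actually positive
    false_positives = fpr * (1.0 - prior)   # flagged but actually negative
    return true_positives / (true_positives + false_positives)


BASE_RATE = 0.0036   # crime rate quoted in the note (0.36%)
TPR = 0.89           # true positive rate quoted in the note
ASSUMED_FPR = 0.07   # ASSUMPTION: not stated in the note above

flagged_probability = posterior(BASE_RATE, TPR, ASSUMED_FPR)
print(f"P(criminal | flagged) = {flagged_probability:.2%}")
```

Under that assumed false positive rate, flagged innocents outnumber flagged criminals by roughly twenty to one, simply because innocents make up over 99% of the population.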

Kachur, Alexander, et al. “Assessing the Big Five Personality Traits Using Real-Life Static Facial Images.” Scientific Reports, vol. 10, 2020, article 8487.

Their most significant finding was that conscientiousness was the most accurately predicted trait from facial images for both genders (correlations of 0.360 for men and 0.335 for women). This aligns with evolutionary theories suggesting traits most relevant for cooperation would be more visibly reflected in facial features, as conscientiousness is crucial for social coordination and group cohesion. Genes account for 30-60% of the variation in Big Five traits like conscientiousness.

Schmälzle, Ralf, et al. “Visual Cues That Predict Intuitive Risk Perception in the Case of HIV.” PLOS ONE, vol. 14, no. 2, 2019, article e0211770.

Risk-enhancing cues included unconventional appearance, worn facial features, body adornment, coquettish gaze, and reddened eyes. Safety-signaling cues included friendly expressions, muscular build, average facial features, height, and heavier body type. People with negative emotional expressions (serious, worried, angry) were judged riskier, while those with positive expressions (smiling, happy) were perceived as safer. The strongest predictors of perceived HIV risk were impressions of irresponsibility, lack of education, and selfishness. Trustworthiness strongly predicted safety perceptions, while health and attractiveness had minimal correlation with HIV risk judgments.

Wade, Nicholas. “The Human Experiment.” A Troublesome Inheritance: Genes, Race and Human History, Penguin Books, 2014, pp. 58–73.

No single skeletal feature definitively determines racial classification; rather, combinations of features that appear more frequently in one group allow for accurate identification. This has created tension among anthropologists who reject racial categorization yet acknowledge these measurable differences. Many researchers now avoid the term ‘race’ while continuing to study these distinctions, using alternative terminology like ‘ancestry,’ ‘population structure,’ or ‘ancestry informative markers’ (AIMs).

Wang, Yu, et al. “Do They All Look the Same? Deciphering Chinese, Japanese and Koreans by Fine-Grained Deep Learning.” arXiv preprint, 2016.

Pantel, Jean Tori, et al. “Efficiency of Computer-Aided Facial Phenotyping (DeepGestalt) in Individuals With and Without a Genetic Syndrome: Diagnostic Accuracy Study.” Journal of Medical Internet Research, vol. 22, no. 10, 22 Oct. 2020, e19263, doi:10.2196/19263.

Lukac, Martin. “Speech-Based Personality Prediction Using Deep Learning with Acoustic and Linguistic Embeddings.” Scientific Reports, vol. 14, 2024, article 30149.

The AI’s predictions of the speakers’ self-reported personalities showed significant correlations: for example, it could estimate neuroticism with r ≈ 0.39 and extraversion with r ≈ 0.26.

Nagaraja, G. S., and Revathi Santhosh. “Multimodal Personality Prediction Using Deep Learning Techniques.” 7th International Conference on Computational Systems and Information Technology for Sustainable Solutions (CSITSS), IEEE, 2023, doi:10.1109/CSITSS60515.2023.10334177.